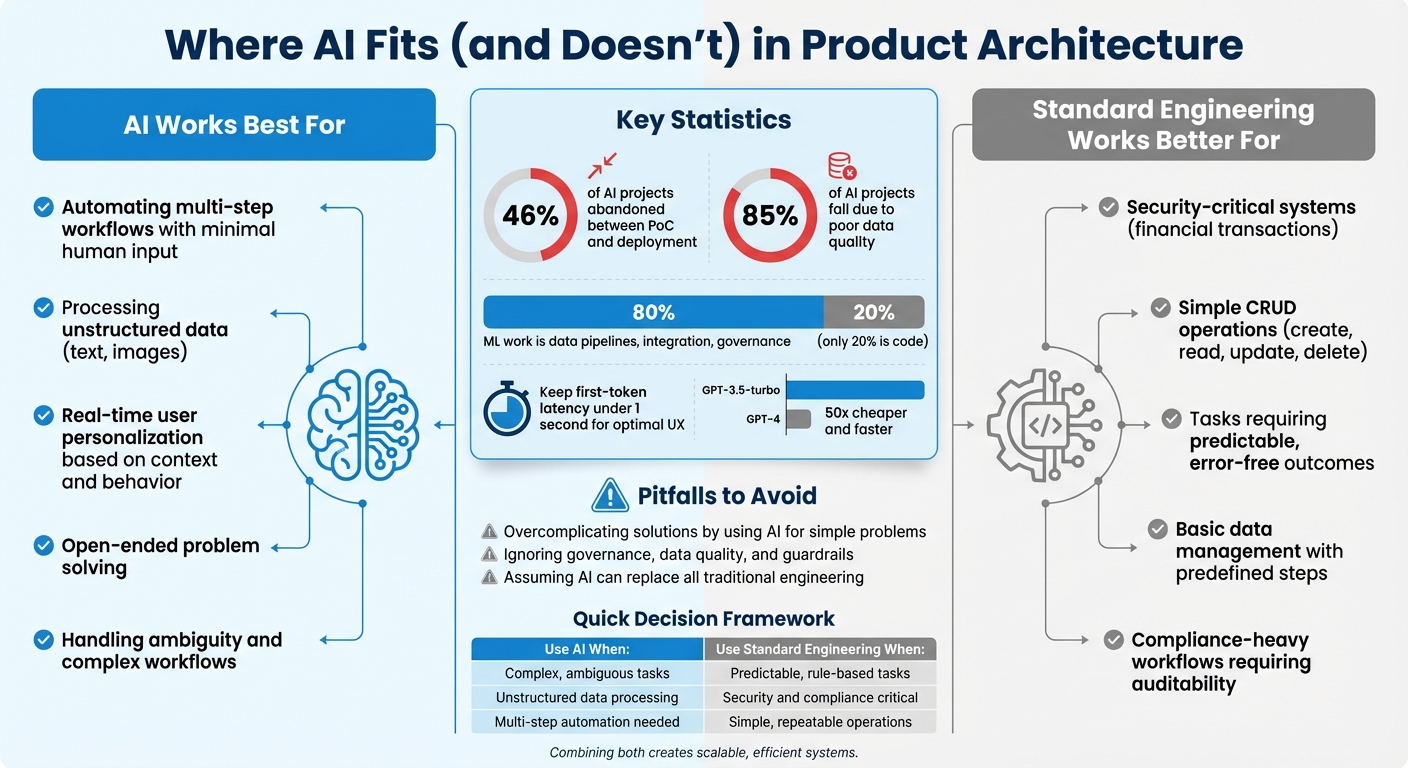

AI is reshaping how products are built, but it’s not a one-size-fits-all solution. Here’s the key takeaway: AI excels at handling ambiguity, unstructured data, and complex workflows, while standard engineering is better for predictable, rule-based tasks. Combining the two creates scalable, efficient systems.

Key Points:

- AI Works Best For:
  - Automating multi-step workflows with minimal human input.
  - Processing unstructured data like text or images.
  - Real-time user personalization based on context and behavior.
- Standard Engineering Works Better For:
  - Security-critical systems like financial transactions.
  - Simple CRUD operations (create, read, update, delete).
  - Tasks requiring predictable, error-free outcomes.
- Pitfalls to Avoid:
  - Overcomplicating solutions by using AI for simple problems.
  - Ignoring the importance of governance, data quality, and guardrails.
  - Assuming AI can replace all traditional engineering methods.

Actionable Steps:

- Start small: Test AI on a single workflow or use case.

- Use a layered architecture: Separate UI, logic, models, and data.

- Optimize costs: Use smaller models for simple tasks and advanced ones for complex reasoning.

- Build governance frameworks: Ensure data lineage, privacy, and compliance.

By integrating AI thoughtfully, you can improve efficiency without overengineering your systems.

AI vs Standard Engineering: When to Use Each Approach in Product Architecture


Where AI Works Best in Product Architecture

AI has come a long way from the rigid, rule-based systems of the past. Its real strength lies in handling ambiguity, unstructured data, and complex, multi-step workflows – areas that would otherwise demand extensive conditional logic. By leveraging AI’s probabilistic reasoning, you can tackle tasks that thrive on flexibility and adaptability. Below, we’ll explore specific areas where AI shines in product architecture.

Workflow Optimization and Automation

One of AI’s standout capabilities is its ability to manage and streamline multi-step workflows autonomously. Instead of relying on static if-then rules, AI leverages autonomous agents to monitor, adjust, and integrate with external systems seamlessly [5].

These systems often utilize microservices – like RAG retrievers, summarizers, and data pipelines – that work in parallel [8]. Picture a "Manager" agent coordinating a team of specialist agents: one updates the CRM, another sends out emails, and a third queries your database. This modular setup not only simplifies maintenance but also allows tasks to be executed simultaneously, boosting efficiency.
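As a rough illustration of the "Manager" pattern, the sketch below fans out to three specialists concurrently. The function names (`update_crm`, `send_email`, `query_database`, `manager`) are hypothetical stand-ins, and the sleeps simulate network calls; a real system would call actual services or agent frameworks here.

```python
import asyncio


# Hypothetical specialist agents; each stands in for a real integration.
async def update_crm(customer_id: str) -> str:
    await asyncio.sleep(0.01)  # simulate network I/O
    return f"crm updated for {customer_id}"


async def send_email(customer_id: str) -> str:
    await asyncio.sleep(0.01)
    return f"email sent to {customer_id}"


async def query_database(customer_id: str) -> str:
    await asyncio.sleep(0.01)
    return f"orders fetched for {customer_id}"


async def manager(customer_id: str) -> dict[str, str]:
    """Run all specialists concurrently and collect their results by name."""
    specialists = {
        "crm": update_crm,
        "email": send_email,
        "database": query_database,
    }
    results = await asyncio.gather(
        *(agent(customer_id) for agent in specialists.values()),
        return_exceptions=True,  # one failing specialist should not sink the rest
    )
    return {
        name: (f"failed: {result}" if isinstance(result, Exception) else result)
        for name, result in zip(specialists, results)
    }
```

Calling `asyncio.run(manager("cust-42"))` returns one entry per specialist. Because the specialists run side by side, the total wait is roughly that of the slowest one rather than the sum of all three.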

The orchestration layer plays a crucial role here, automatically handling tool routing and managing error fallbacks. For example, if an AI gateway detects high latency on a specific large language model (LLM) endpoint, it can reroute traffic to an alternative endpoint without interruption [2][8]. For workflows requiring real-time responsiveness, aim to keep first-token latency under 1 second – latencies beyond 2 seconds can noticeably degrade user experience [2].
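The rerouting idea can be sketched in a few lines. This is a simplified illustration, not how any particular gateway product works: the `Endpoint` class and `pick_endpoint` function are assumptions, and the 1-second budget mirrors the first-token target mentioned above.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class Endpoint:
    name: str
    recent_latencies_s: list[float] = field(default_factory=list)

    def record(self, latency_s: float, window: int = 20) -> None:
        """Store a latency sample, keeping only the most recent `window` values."""
        self.recent_latencies_s.append(latency_s)
        del self.recent_latencies_s[:-window]

    def average_latency(self) -> float:
        # An endpoint with no samples yet is treated as healthy.
        return mean(self.recent_latencies_s) if self.recent_latencies_s else 0.0


def pick_endpoint(endpoints: list[Endpoint], budget_s: float = 1.0) -> Endpoint:
    """Return the first endpoint within the latency budget, else the fastest one."""
    for endpoint in endpoints:  # list order expresses preference
        if endpoint.average_latency() <= budget_s:
            return endpoint
    return min(endpoints, key=Endpoint.average_latency)
```

If the preferred endpoint has been averaging 2.4 seconds and the fallback 0.6 seconds, `pick_endpoint` returns the fallback. Production gateways typically add health checks, retries, and circuit breakers on top of this.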

Next, let’s examine how backend automation can speed up development.

Backend Automation for Faster Development

AI is a game-changer for backend development, automating repetitive coding tasks and simplifying data pipeline management. A smart approach involves using a tiered model strategy: deploy cost-efficient models like GPT-3.5-turbo for high-volume, straightforward tasks, and reserve more advanced reasoning models for complex problem-solving [9][7].
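A tiered strategy needs some rule for deciding which tier a request goes to. The sketch below uses a deliberately crude heuristic (prompt length plus a few keyword hints); the model identifiers, the hint list, and the word threshold are all assumptions for illustration, and many teams use a small classifier model for this step instead.

```python
SMALL_MODEL = "small-fast-model"        # hypothetical identifier
LARGE_MODEL = "large-reasoning-model"   # hypothetical identifier

# Crude substring hints that a request may need multi-step reasoning.
REASONING_HINTS = ("why", "compare", "plan", "trade-off", "step by step")


def choose_model(prompt: str, max_small_words: int = 60) -> str:
    """Send short, simple prompts to the cheap tier and the rest to the large tier."""
    text = prompt.lower()
    looks_complex = any(hint in text for hint in REASONING_HINTS)
    is_long = len(text.split()) > max_small_words
    return LARGE_MODEL if looks_complex or is_long else SMALL_MODEL
```

With these rules, "Translate 'hello' to French" goes to the small tier, while "Compare these two pricing options and explain why one is better" goes to the large one. Substring matching will misfire on some inputs, so it is worth logging routing decisions and reviewing them.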

Automated data pipelines are another key feature, transforming, chunking, and embedding data to support multiple AI applications through a unified backend [8][2]. This is especially beneficial for startups building Minimum Viable Products (MVPs), where speed often takes precedence over perfection.

"The core idea of in-context learning is to use LLMs off the shelf… then control their behavior through clever prompting and conditioning on private ‘contextual’ data." – Matt Bornstein and Rajko Radovanovic, Andreessen Horowitz [9]

To optimize costs and efficiency, implement semantic caching, which stores model outputs based on embedding similarity. This avoids redundant API calls for recurring queries and keeps expenses in check [2]. Additionally, version control for prompts and model settings ensures a smooth transition from prototype to production [8].
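To make semantic caching concrete, here is a minimal sketch. It assumes a toy word-count "embedding" so the example runs without external services; a real deployment would call an embedding model and store vectors in a proper index. The `SemanticCache` class and the 0.8 threshold are illustrative choices.

```python
import math
from collections import Counter
from typing import Optional


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class SemanticCache:
    def __init__(self, threshold: float = 0.8) -> None:
        self.threshold = threshold
        self.entries: list[tuple[Counter, str]] = []

    def get(self, query: str) -> Optional[str]:
        """Return the cached answer for the most similar query, if similar enough."""
        vector = embed(query)
        best = max(self.entries, key=lambda e: cosine(vector, e[0]), default=None)
        if best is not None and cosine(vector, best[0]) >= self.threshold:
            return best[1]
        return None

    def put(self, query: str, answer: str) -> None:
        self.entries.append((embed(query), answer))
```

After `cache.put("what is your refund policy", "30 days")`, a lookup for "what is your refund policy please" hits the cache, while an unrelated question such as "how do I reset my password" misses and would go to the model. The threshold controls the trade-off between savings and the risk of serving a wrong cached answer.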

Real-Time Personalization in User Experience

AI has the ability to transform static user interfaces into dynamic, adaptive experiences. By analyzing real-time user behavior, context, and intent, AI can tailor content on the fly – adjusting for factors like location, device type, or user state – all without relying on manually set rules [10]. This adaptability integrates seamlessly with modern modular architectures to enhance user experience.

For voice-enabled products, maintaining Text-to-Speech latency under 250 milliseconds is crucial for natural, conversational interactions [2]. Streaming technologies like Server-Sent Events or WebSockets can deliver partial responses instantly, reducing perceived wait times while the backend completes its tasks [2].
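For the Server-Sent Events option, the wire format is simple: each event is one or more `data:` lines followed by a blank line. The sketch below shows only the framing; the `sse_events` name and the `done` end-of-stream event are assumptions, and the web framework that actually sends the response is left out.

```python
from typing import Iterable, Iterator


def sse_events(chunks: Iterable[str]) -> Iterator[str]:
    """Wrap partial model output in Server-Sent Events framing."""
    for chunk in chunks:
        # Each line of a payload needs its own "data:" field; a blank line ends the event.
        body = "\n".join(f"data: {line}" for line in chunk.split("\n"))
        yield f"{body}\n\n"
    # Hypothetical end-of-stream marker so the client knows when to stop listening.
    yield "event: done\ndata: [DONE]\n\n"
```

Feeding it the chunks `["Hel", "lo"]` yields `"data: Hel\n\n"`, then `"data: lo\n\n"`, then the closing event. The client can render each chunk as it arrives instead of waiting for the full response.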

The intelligence layer further enhances personalization by processing unstructured data from sources like chat logs and support tickets. This allows for scalable customization without the need to create separate logic paths for each user segment. Guardrails can also run concurrently with generation to check that requests and outputs meet privacy and brand safety standards, so they add little or no extra latency [5].
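One way to picture a concurrent guardrail: start generation and the safety check at the same time, and cancel generation if the check fails. This is a simplified sketch with stand-in functions (`generate_reply`, `input_is_safe`) and a basic email regex as the only PII rule; real guardrails would be considerably more thorough.

```python
import asyncio
import re

# Very rough email detector, used here as a stand-in for a real PII check.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")


async def generate_reply(prompt: str) -> str:
    await asyncio.sleep(0.05)  # stand-in for a model call
    return f"Here is a summary of: {prompt}"


async def input_is_safe(prompt: str) -> bool:
    await asyncio.sleep(0.02)  # stand-in for a moderation or PII service
    return EMAIL_PATTERN.search(prompt) is None


async def guarded_reply(prompt: str) -> str:
    """Run the guardrail while generation is already in flight."""
    generation = asyncio.create_task(generate_reply(prompt))
    if not await input_is_safe(prompt):
        generation.cancel()  # stop paying for output that will not be shown
        return "Sorry, I can't help with that request."
    return await generation
```

Because the check overlaps with generation, the total wait is roughly the slower of the two rather than their sum. Output-side checks follow the same pattern but need the generated text, so they are usually applied per chunk while streaming.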

Where Standard Engineering Works Better Than AI

AI shines in handling ambiguity and tackling complex processes, but there are situations where traditional engineering outperforms it. Understanding where each approach works best can help avoid costly errors and maintain a stable, secure product architecture.

Security and Financial Systems

In areas like financial transactions, authentication, and compliance-heavy workflows, predictability is non-negotiable. These systems need to ensure that the same input always delivers the same output. Unlike traditional engineering, AI operates on probabilities, which introduces uncertainty [6].

AI systems can pose significant risks in regulated industries like healthcare and finance. For example, opaque data flows and "hallucinated" outputs make AI decisions difficult to audit, potentially leading to compliance issues [1]. A study on generative AI in medical content revealed that only 7% of references were both valid and accurate, while 47% were entirely fabricated [6].

For these high-stakes environments, traditional engineering offers the transparency and strict data boundaries required to meet regulatory standards. It ensures every decision can be traced, helping define clear rules about what an agent can and cannot do. This approach minimizes risks like data leaks, runaway costs, and non-compliance [1].

Basic CRUD Operations

When it comes to simple data management tasks – creating, reading, updating, and deleting records – AI adds little value. These operations follow a set sequence of steps, making traditional coding far more efficient and cost-effective [3].

Core workflows such as login systems, payment processing, and approvals demand reliability. Using AI in these areas can introduce delays or disruptions, especially when AI generates inconsistent or unstructured outputs (e.g., "it depends" instead of a clear "yes" or "no"). Traditional engineering ensures these workflows remain fast and dependable, without the high computational costs or unpredictability of AI [12].

For tasks with predefined steps, sticking with traditional methods avoids unnecessary complexity and keeps systems running smoothly.

When AI Adds Unnecessary Complexity

Sometimes, using AI for straightforward problems can overcomplicate things. For instance, one team attempted to use a large language model to optimize household energy consumption, aiming for 30% savings. However, a simple scheduling rule – like running appliances after 10:00 PM – could have achieved similar results at a fraction of the cost and complexity [13].
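For comparison, the scheduling rule fits in a single function. The 10:00 PM start comes from the example above; the 6:00 AM end of the window and the `may_run_appliance` name are assumptions added for illustration.

```python
from datetime import time


def may_run_appliance(
    now: time,
    start: time = time(22, 0),  # 10:00 PM, from the example
    end: time = time(6, 0),     # assumed end of the overnight window
) -> bool:
    """Allow runs inside an overnight window that wraps past midnight."""
    return now >= start or now < end
```

`may_run_appliance(time(23, 30))` is `True` and `may_run_appliance(time(14, 0))` is `False`. There is nothing to train, monitor, or pay per call, which is the point of the comparison.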

"Generative AI isn’t an exception – its seemingly limitless capabilities only exacerbate the tendency to use generative AI for everything."

– Chip Huyen, Co-founder, Claypot AI [13]

The "80/20 trap" is a common pitfall. LinkedIn discovered that achieving 80% of an AI solution’s potential took just one month, but pushing beyond 95% required four additional months due to challenges like hallucinations [13]. Moreover, nearly half of AI projects (46%) are abandoned between the proof-of-concept phase and full-scale deployment, with the percentage of companies scrapping most of their AI initiatives jumping from 17% to 42% in just a year [12].

Instead of banking on future AI advancements to fix current issues, it’s smarter to build robust guardrails around existing model limitations [11]. If a simpler solution – like rule-based systems or direct API calls – gets the job done, there’s no need to introduce AI [5]. Start with straightforward tools: use term-based retrieval before vector databases, and experiment with basic prompts before diving into fine-tuning [13].

These examples highlight the importance of choosing the right tool for the job. A balanced approach – leveraging AI where it excels and relying on traditional engineering where it’s more effective – ensures better outcomes.

| Scenario | Preferred Approach | Reason |
|---|---|---|
| Financial Transactions | Standard Engineering | Predictability, auditability, and zero-error tolerance [1] |
| Basic CRUD Operations | Standard Engineering | Cost-effective and efficient for predefined tasks [3] |
| Security-Critical Logic | Standard Engineering | Ensures compliance and maintains audit trails [1] |
| Open-ended Problem Solving | AI | Handles complex, knowledge-driven tasks [3] |
| Real-time Personalization | AI | Processes large datasets to optimize personalization |

Choosing the simplest and most effective solution for each scenario helps maintain a solid and reliable system architecture.


How to Integrate AI Into Your Product (Without Overcomplicating It)

Keep things straightforward and only add complexity when it’s genuinely necessary for your business. Many startups make the mistake of overengineering their AI systems before figuring out what actually works.

The I.D.E.A.L. Framework for AI Integration

AlterSquare follows a five-step process to integrate AI while avoiding unnecessary complications:

- Ideation: Test AI concepts quickly with prototypes before committing significant resources. Focus on specific use cases like automated email routing or content summarization to confirm they address real problems.

- Development: Build AI features step by step, using agile methods, instead of trying to launch everything at once.

- Evaluation: Tie technical metrics (like hallucination rates or latency) to business goals (such as reducing customer support times) using the OGSM framework [15].

- Architecture: Design AI systems to fit seamlessly with existing infrastructure. Use a layered approach to separate UI, logic/routing, models, and data handling.

- Lifecycle Support: Continuously improve AI performance after launch, using real-world user data.

Start with prompt engineering for general reasoning tasks. Then, move to Retrieval-Augmented Generation (RAG) for specific or up-to-date data. For more advanced needs, explore agent-based workflows for multi-step automation, and only consider fine-tuning for specialized styles or niche terminology.

"The journey to a production-grade generative AI application begins long before the first line of code is written. It starts with a strategically sound proof of concept (PoC)." – AWS Prescriptive Guidance [15]

Before writing any code, focus on data readiness. Build accurate datasets and validate data lineage to ensure your AI relies on reliable, current information. Poor data quality is a major reason why 85% of AI projects fail. Interestingly, machine learning code typically accounts for just 20% of the work, with the other 80% going into data pipelines, integration, and governance [14].

This structured method helps determine when to use AI versus sticking to traditional engineering methods.

When to Use AI vs. Standard Engineering

The choice between AI and traditional engineering depends on the type of problem you’re solving. Traditional engineering is ideal for tasks with clear steps and predictable outcomes, like payment processing, authentication, or CRUD operations. These tasks produce consistent results from the same input. AI, on the other hand, is better suited for open-ended problems requiring nuanced judgment, unstructured data processing, or autonomous decision-making. For example, AI can analyze conversation history and sentiment to route customer support inquiries, while traditional engineering handles ticket creation and assignment.

Maximize the potential of a single AI agent first. Use structured outputs, like strict JSON schemas, to connect with traditional engineering components. Before moving to multi-agent systems, set up guardrails like PII redaction, safety checks, and relevance validation. Additionally, design for human involvement by creating scenarios where failures can be handed off to human operators.
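The hand-off between a probabilistic model and deterministic code is where strict validation pays off. Below is a minimal sketch of that boundary for the support-routing example: the queue names, the `queue`/`confidence` fields, and the 0.7 confidence cutoff are all hypothetical, and a production system might use a schema library rather than hand-written checks.

```python
import json

ALLOWED_QUEUES = {"billing", "technical", "general"}  # hypothetical queue names


def parse_routing_decision(raw_model_output: str, min_confidence: float = 0.7) -> dict:
    """Validate the model's JSON before any deterministic code acts on it."""
    try:
        data = json.loads(raw_model_output)
        queue = data["queue"]
        confidence = float(data["confidence"])
    except (json.JSONDecodeError, KeyError, TypeError, ValueError):
        # Free text such as "it depends" ends up here and goes to a person.
        return {"queue": "human_review", "reason": "unparseable model output"}
    if (
        not isinstance(queue, str)
        or queue not in ALLOWED_QUEUES
        or not 0.0 <= confidence <= 1.0
    ):
        return {"queue": "human_review", "reason": "schema violation"}
    if confidence < min_confidence:
        return {"queue": "human_review", "reason": "low confidence"}
    return {"queue": queue, "reason": "model decision"}
```

A well-formed, confident answer such as `{"queue": "billing", "confidence": 0.92}` is routed automatically. Anything malformed, out of schema, or uncertain falls back to `human_review`, so downstream ticket creation only ever sees a known set of values.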

The following tools can support both AI and traditional engineering, ensuring a balanced approach.

Recommended Tools for AI Development

For orchestrating workflows, frameworks like LangGraph, Haystack, and the OpenAI Agents SDK manage the flow between AI reasoning and API calls, while also handling state management and session history. On the intelligence side, tools like vLLM, TensorRT-LLM, or Triton Inference Server optimize model performance. Use model routing to balance costs and speed: direct simple queries to smaller models and reserve complex reasoning for larger ones. For example, GPT-3.5-turbo is about 50 times cheaper than GPT-4 and significantly faster, making it a practical choice for scaling applications [9].

For managing data and knowledge, vector databases like Milvus, Qdrant, Weaviate, or pgvector are excellent for RAG implementations. Semantic caching can also reduce costs and latency by reusing model outputs for similar queries. Observability tools like OpenTelemetry, Prometheus, and Grafana are essential for tracking user requests across retrieval, model, and tool spans, especially in hybrid systems where AI and traditional engineering intersect.

Begin with top-tier models to establish performance benchmarks. Prototypes built with advanced models (like GPT-4o or o1) help define what "good" looks like. Once benchmarks are set, you can optimize for cost by exploring smaller models. Use structured outputs and adaptable prompt templates with policy variables instead of hard-coded prompts to simplify maintenance and evaluation.
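Prompt templates with policy variables can be as simple as the standard library's `string.Template`. In this sketch the template text, the policy keys, and the product name "Acme" are invented for illustration; the point is that policy lives in data rather than being baked into a prompt string.

```python
from string import Template

SUPPORT_PROMPT = Template(
    "You are a support assistant for $product.\n"
    "Tone: $tone. Maximum length: $max_words words.\n"
    "Never discuss: $forbidden_topics.\n\n"
    "Customer message:\n$message"
)

# Hypothetical defaults; in practice these might come from versioned config.
DEFAULT_POLICY = {
    "product": "Acme",
    "tone": "friendly",
    "max_words": "120",
    "forbidden_topics": "legal advice, competitor pricing",
}


def render_prompt(message: str, **overrides: str) -> str:
    """Fill the template from default policy values plus per-call overrides."""
    policy = {**DEFAULT_POLICY, **overrides}
    return SUPPORT_PROMPT.substitute(policy, message=message)
```

`render_prompt("My invoice is wrong", tone="formal")` changes only the tone line. Keeping the template and policy values under version control makes it easier to evaluate one change at a time.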

| Layer | Recommended Tools |
|---|---|
| Orchestration | LangGraph, Haystack, OpenAI Agents SDK |
| Intelligence | vLLM, TensorRT-LLM, Triton Inference Server |
| Data/Knowledge | Milvus, Qdrant, Weaviate, pgvector |
| Observability | OpenTelemetry, Prometheus, Grafana |

"AI doesn’t replace weak processes: it exposes and amplifies them." – Vittesh Sahni, Sr. Director of AI at Coherent Solutions [14]

Tools alone can’t fix broken workflows. Focus on building strong data pipelines and clear integration points before adding AI. AlterSquare’s method emphasizes operational readiness, addressing data retrieval challenges before diving into advanced prompts. Often, improving data quality is the key to unlocking better performance in RAG systems.

Making Smart Decisions About AI in Product Architecture

Key Takeaways for Founders and Product Teams

When integrating AI into your product, start by focusing on clear business outcomes. The OGSM framework can help you connect high-level objectives – like improving customer support efficiency or increasing sales productivity – to specific technical metrics such as answer accuracy, hallucination rates, and response latency [15]. For instance, you might set goals like reducing customer service handle time by 30% or increasing qualified leads by 15% to guide your AI initiatives [15].

Before diving into AI, consider whether a simpler, rule-based approach might do the job. If a problem can be solved reliably with deterministic methods, these are often more cost-effective and less complex than AI [4][5]. This approach aligns with the principle of combining AI with traditional methods where appropriate. Traditional engineering excels at handling predictable tasks like payment processing or CRUD operations, while AI shines in more open-ended scenarios requiring nuanced judgment or unstructured data analysis.

Adopt a tiered model strategy to optimize performance and cost. Start with high-performance models to set a quality benchmark, then explore smaller, faster models for routine tasks [8][5]. Use an AI Gateway to control token usage, enforce limits, and manage costs [8]. Breaking down complex AI tasks into smaller, modular components can also improve system resilience [8].
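The token-limit part of an AI Gateway can be approximated with a small budget tracker. This is a sketch under simple assumptions: the `TokenBudget` class is hypothetical, usage is tracked per team in memory, and the daily reset and persistence a real gateway would need are omitted.

```python
class TokenBudget:
    """Track token spend per team and refuse requests that exceed the limit."""

    def __init__(self, daily_limit: int) -> None:
        self.daily_limit = daily_limit
        self.used: dict[str, int] = {}

    def try_spend(self, team: str, tokens: int) -> bool:
        """Record the spend and return True, or return False if it would exceed the limit."""
        spent = self.used.get(team, 0)
        if spent + tokens > self.daily_limit:
            return False
        self.used[team] = spent + tokens
        return True
```

With a limit of 1,000 tokens, a team that has spent 800 is refused a 300-token request but allowed a 200-token one. Checking the budget before the model call, using an estimated token count, keeps a runaway loop from generating a surprise bill.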

When running a proof of concept, define clear exit criteria. Set thresholds for quality, speed, and cost at the outset. If these standards aren’t met, be ready to pivot or halt the initiative to avoid wasting resources [15]. Keep in mind that having clean and well-documented data is often more critical than the choice of AI model. Validate your datasets and ensure data lineage is in order before development begins.
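Exit criteria are easier to enforce when they are written down as data before the PoC starts. The sketch below is one possible shape; the `ExitCriteria` fields and the example thresholds are assumptions, not recommended values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExitCriteria:
    min_answer_accuracy: float       # fraction between 0 and 1
    max_p95_latency_s: float         # seconds
    max_cost_per_request_usd: float  # US dollars


def evaluate_poc(
    criteria: ExitCriteria,
    answer_accuracy: float,
    p95_latency_s: float,
    cost_per_request_usd: float,
) -> list[str]:
    """Return the list of failed criteria; an empty list means the PoC passes."""
    failures = []
    if answer_accuracy < criteria.min_answer_accuracy:
        failures.append("quality")
    if p95_latency_s > criteria.max_p95_latency_s:
        failures.append("speed")
    if cost_per_request_usd > criteria.max_cost_per_request_usd:
        failures.append("cost")
    return failures
```

For instance, with `ExitCriteria(0.9, 2.0, 0.05)`, measured results of 0.93 accuracy, 2.6 seconds, and $0.03 per request return `["speed"]`, which gives the team a specific reason to pivot or stop rather than a vague sense that the PoC is "almost there".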

By following these steps, you can ensure that AI is deployed only where it adds measurable value, complementing traditional engineering methods.

How AlterSquare Can Help

AlterSquare’s I.D.E.A.L. Framework builds on these principles, helping businesses align AI initiatives with their goals through rapid prototyping and agile development. By tying technical metrics to business outcomes using the OGSM framework, they design AI systems that integrate seamlessly with your existing infrastructure.

Whether you’re looking for AI-driven solutions to automate workflows, product engineering to create scalable architectures, or architecture modernization to upgrade legacy systems, AlterSquare provides CTO-level guidance and hands-on support. Their software consulting services help you evaluate whether to build or buy solutions, ensuring AI is only used where it adds real value. For founders navigating AI’s complexities, AlterSquare offers the strategic insight and technical know-how to make smart, ROI-driven decisions about where AI fits – and where it doesn’t – in your product architecture.

FAQs

How can AI work alongside traditional engineering in product architecture?

AI has the potential to elevate traditional engineering by taking over complex, data-heavy tasks, all while maintaining the solid structure and dependability of established systems. When integrated thoughtfully into workflows, AI can improve areas like process optimization, backend automation, and creating more responsive user experiences. The key to making this work lies in modular, scalable designs that ensure AI components – such as APIs, data pipelines, and microservices – fit smoothly into existing systems without disruption.

That said, introducing AI isn’t without its hurdles. Challenges like technical debt, unpredictable system behavior, or gaps in team expertise can arise. To navigate these issues, it’s important to strike a balance. Marry AI’s strengths with traditional engineering practices, such as thorough testing, well-defined boundaries, and incremental improvements. This approach allows teams to harness AI’s potential while keeping systems transparent, reliable, and firmly under control.

What mistakes should I avoid when adding AI to an existing system?

When adding AI to existing systems, a frequent misstep is relying on AI in situations where simpler, more traditional solutions would suffice. This not only complicates processes but can also lower efficiency. It’s equally important to recognize that subpar outcomes might be due to flaws in the product’s design, not the AI itself. Careful evaluation and ongoing human oversight are crucial to avoid this.

Another common issue is jumping into overly complex AI architectures right from the start. These can make scaling and maintaining systems much more difficult. Instead, it’s wiser to begin with straightforward, reliable solutions. Neglecting to thoroughly analyze current workflows and bottlenecks is another pitfall – it often results in AI implementations that fail to address actual challenges. Lastly, having robust data strategies and safety protocols in place is essential to ensure reliability and reduce risks in production environments.

By steering clear of these common mistakes, AI can genuinely improve your systems without creating unnecessary obstacles.

When should traditional engineering methods be used instead of AI in product development?

When it comes to certain projects, traditional engineering methods can often be the smarter and simpler choice. This is especially true when incorporating AI would unnecessarily complicate things, introduce risks, or create inefficiencies. For example, AI systems can sometimes behave unpredictably, demand higher levels of maintenance, or require specialized skills that your team might not have. In such cases, sticking with traditional approaches tends to be more reliable and easier to handle.

Traditional methods also shine in tasks that are straightforward or well-understood – like basic workflows or features that don’t change dynamically. These approaches are generally more efficient and cost-effective for such scenarios. On the other hand, AI proves its worth when tackling complex challenges, automating adaptive processes, or delivering a significantly better user experience. But if the potential benefits of AI don’t clearly outweigh its challenges, it’s usually best to stick with tried-and-true engineering techniques.
